Race Conditions

Limit Overrun Race Conditions

The most well-known type of race condition enables you to exceed some kind of limit imposed by the business logic of the application. For example, consider an online store that lets you enter a promotional code during checkout to get a one-time discount on your order. To apply this discount, the application may perform the following high-level steps:

- Check that you haven’t already used this code.

- Apply the discount to the order total.

- Update the record in the database to reflect the fact that you’ve now used this code.
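The three steps above form a check-then-act sequence. As a rough illustration only, the sketch below models two simultaneous requests as threads. Everything in it is hypothetical (the `run_checkout` helper, the `PROMO20` code, the order total), and the `threading.Barrier` exists purely to hold both requests inside the race window so the result is repeatable. It is not how any particular store is implemented.

```python
import threading


def run_checkout(atomic):
    """Simulate two simultaneous requests applying the same one-time code."""
    state = {"total": 100, "used": set()}   # stands in for the database
    lock = threading.Lock()
    window = threading.Barrier(2)           # holds both requests in the race window

    def apply_code(code, discount):
        if atomic:
            # Check and update happen as one indivisible step.
            with lock:
                if code in state["used"]:
                    return
                state["used"].add(code)
                state["total"] -= discount
            return
        if code not in state["used"]:       # step 1: check
            window.wait()                   # both requests have now passed the check
            with lock:
                state["total"] -= discount  # step 2: apply the discount
            state["used"].add(code)         # step 3: record that the code was used

    threads = [threading.Thread(target=apply_code, args=("PROMO20", 20))
               for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["total"]
```

With `atomic=False` the discount is applied twice, because both requests pass the check before either records the code as used. With `atomic=True` the second request sees the updated record and is rejected.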

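If you send two or more requests applying the same code at almost the same time, each one can pass the check before any of them updates the record, so the discount is applied more than once.

There are many variations of this kind of attack, including: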

- Redeeming a gift card multiple times

- Rating a product multiple times

- Withdrawing or transferring cash in excess of your account balance

- Reusing a single CAPTCHA solution

- Bypassing an anti-brute-force rate limit

Limit overruns are a subtype of so-called “time-of-check to time-of-use” (TOCTOU) flaws. Later in this topic, we’ll look at some examples of race condition vulnerabilities that don’t fall into either of these categories.

Detecting and Exploiting Limit Overrun Race Conditions with Burp Repeater

The process of detecting and exploiting limit overrun race conditions is relatively simple. In high-level terms, all you need to do is:

- Identify a single-use or rate-limited endpoint that has some kind of security impact or other useful purpose.

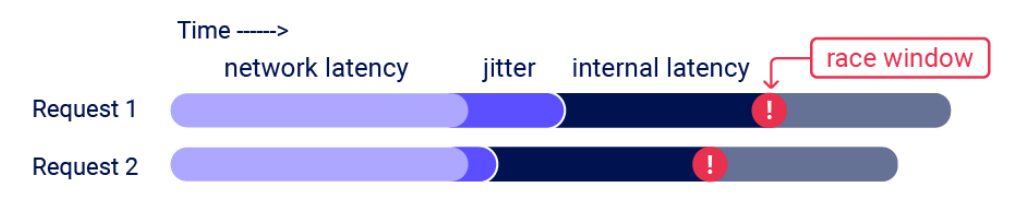

- Issue multiple requests to this endpoint in quick succession to see if you can overrun this limit.

The primary challenge is timing the requests so that at least two race windows line up, causing a collision. This window is often just milliseconds and can be even shorter.

Even if you send all of the requests at exactly the same time, in practice there are various uncontrollable and unpredictable external factors that affect when the server processes each request and in which order.

Burp automatically adjusts the technique it uses to suit the HTTP version supported by the server:

- For HTTP/1, it uses the classic last-byte synchronization technique.

- For HTTP/2, it uses the single-packet attack technique, first demonstrated by PortSwigger Research at Black Hat USA 2023. The single-packet attack enables you to completely neutralize interference from network jitter by using a single TCP packet to complete 20-30 requests simultaneously.
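To make the HTTP/1 technique more concrete, here is a minimal sketch of the idea behind last-byte synchronization: send everything except the final byte of each request, then release the final bytes back to back. The helper names (`last_byte_sync`, `open_connections`) are hypothetical, the sketch assumes plain TCP without TLS for brevity, and it is not a description of how Burp implements the technique internally.

```python
import socket


def last_byte_sync(connections, raw_request):
    """Send raw_request on every connection, releasing the final byte last."""
    head, tail = raw_request[:-1], raw_request[-1:]
    for conn in connections:
        conn.sendall(head)   # each request is now one byte short of complete
    for conn in connections:
        conn.sendall(tail)   # the tiny final writes go out back to back


def open_connections(host, port, count):
    """Open `count` TCP connections with Nagle's algorithm disabled."""
    conns = []
    for _ in range(count):
        sock = socket.create_connection((host, port))
        # Avoid the kernel delaying or coalescing the small final write.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        conns.append(sock)
    return conns
```

Because the server cannot start processing a request until its last byte arrives, most of the transfer time is taken out of the race. Only the final one-byte writes remain exposed to network jitter.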

Single-packet Attack

- In Burp Repeater, set up the base request, then duplicate its tab until you have about 20 copies (the original plus 19 duplicates).

- Add the tabs to a single tab group.

- Send the group either in sequence or in parallel. Sending in parallel uses the single-packet attack when the server supports HTTP/2.

Exploiting with Turbo Intruder

Turbo Intruder requires some proficiency in Python, but is suited to more complex attacks, such as ones that require multiple retries, staggered request timing, or an extremely large number of requests. To use the single-packet attack in Turbo Intruder:

- Ensure that the target supports HTTP/2. The single-packet attack is incompatible with HTTP/1.

- Set the engine=Engine.BURP2 and concurrentConnections=1 configuration options for the request engine.

- When queueing your requests, group them by assigning them to a named gate using the gate argument for the engine.queue() method.

- To send all of the requests in a given group, open the respective gate with the engine.openGate() method.

    def queueRequests(target, wordlists):
        engine = RequestEngine(endpoint=target.endpoint,
                               concurrentConnections=1,
                               engine=Engine.BURP2
                               )

        # queue 20 requests in gate '1'
        for i in range(20):
            engine.queue(target.req, gate='1')

        # send all requests in gate '1' in parallel
        engine.openGate('1')

Bypass Rate Limit on Login

- Changing the username allows more login attempts, so the number of failed attempts must be stored server-side per username.

- Consider that there may be a race window between:

  - When you submit the login attempt.

  - When the website increments the counter for the number of failed login attempts associated with a particular username.

- Capture the login request, highlight the password value, and send it to Turbo Intruder.

- Start from the race-single-packet-attack.py example template. The final script looks like this:

    def queueRequests(target, wordlists):

        # as the target supports HTTP/2, use engine=Engine.BURP2 and
        # concurrentConnections=1 for a single-packet attack
        engine = RequestEngine(endpoint=target.endpoint,
                               concurrentConnections=1,
                               engine=Engine.BURP2
                               )

        # assign the list of candidate passwords from your clipboard
        passwords = wordlists.clipboard

        # queue a login request using each password from the wordlist
        # the 'gate' argument withholds the final part of each request
        # until engine.openGate() is invoked
        for password in passwords:
            engine.queue(target.req, password, gate='1')

        # once every request has been queued,
        # invoke engine.openGate() to send all requests in the given gate simultaneously
        engine.openGate('1')


    def handleResponse(req, interesting):
        table.add(req)
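As an optional variation on `handleResponse`, the results table can be limited to responses that look like a successful login. This is a sketch under two assumptions: that the target answers a successful login with a 302 redirect, and that the script runs inside Turbo Intruder, which supplies the global `table` and the `status` attribute on `req`. The `is_login_success` helper is a hypothetical name introduced here.

```python
def is_login_success(status):
    # Assumption: the application redirects (302) after a valid login.
    return status == 302


def handleResponse(req, interesting):
    # 'table' is a global provided by Turbo Intruder at runtime.
    if is_login_success(req.status):
        table.add(req)
```

Filtering this way hides the failed attempts, so it is less useful when you also want to see which passwords were rejected or when the lockout began.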

- If none of the responses indicates a successful login, remove the passwords you now know are wrong from the list, then either wait for the account lock to reset or try another user.

- Look for any 302 responses, which indicate a successful login attempt.
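To see why the parallel attempts get past the limit, it can help to model the server side. The sketch below is purely illustrative: the threshold `MAX_FAILED`, the `simulate_logins` helper, and the passwords are all made up, and a `threading.Barrier` is used to force every parallel request through the counter check before any of them increments it.

```python
import threading

MAX_FAILED = 3  # hypothetical lockout threshold


def simulate_logins(guesses, correct, parallel):
    """Return {password: outcome} for a batch of login attempts on one username."""
    failed = {"count": 0}
    results = {}
    lock = threading.Lock()
    window = threading.Barrier(len(guesses)) if parallel else None

    def attempt(password):
        if failed["count"] >= MAX_FAILED:   # check the failed-attempt counter
            with lock:
                results[password] = "locked"
            return
        if window is not None:
            window.wait()                   # every request has now passed the check
        if password == correct:
            outcome = "success"
        else:
            with lock:
                failed["count"] += 1        # the counter is only updated afterwards
            outcome = "invalid"
        with lock:
            results[password] = outcome

    if parallel:
        threads = [threading.Thread(target=attempt, args=(g,)) for g in guesses]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
    else:
        for g in guesses:
            attempt(g)
    return results
```

Sent one after another, the fourth and fifth guesses hit the lockout. Sent together, every guess is evaluated because the counter is still zero when each request checks it.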

Hidden Multi-step Sequences

In practice, a single request may initiate an entire multi-step sequence behind the scenes, transitioning the application through multiple hidden states that it enters and then exits again before request processing is complete. We’ll refer to these as “sub-states”.

If you can identify one or more HTTP requests that cause an interaction with the same data, you can potentially abuse these sub-states to expose time-sensitive variations of the kinds of logic flaws that are common in multi-step workflows. This enables race condition exploits that go far beyond limit overruns.

For example, you may be familiar with flawed multi-factor authentication (MFA) workflows that let you perform the first part of the login using known credentials, then navigate straight to the application via forced browsing, effectively bypassing MFA entirely.

The following pseudo-code shows how a website could be vulnerable to a race variation of this attack:

    session['userid'] = user.userid
    if user.mfa_enabled:
        session['enforce_mfa'] = True
        # generate and send MFA code to user
        # redirect browser to MFA code entry form

As you can see, this is in fact a multi-step sequence within the span of a single request. Most importantly, it transitions through a sub-state in which the user temporarily has a valid logged-in session, but MFA isn’t yet being enforced. An attacker could potentially exploit this by sending a login request along with a request to a sensitive, authenticated endpoint.
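The sub-state can be reproduced in a small simulation. In the sketch below, the `simulate_login` helper, the `carlos` user ID, and the access rule in `sensitive_endpoint` are assumptions made for illustration. Two `threading.Event` objects pause the login handler between its two session writes so that a second request can observe the session mid-transition. Writing the MFA flag first is shown only as one possible ordering, not as a recommended fix.

```python
import threading


def simulate_login(mfa_flag_first):
    """Return True if a concurrent request reaches a sensitive endpoint mid-login."""
    session = {}
    in_sub_state = threading.Event()
    may_finish = threading.Event()

    def login_handler():
        if mfa_flag_first:
            session["enforce_mfa"] = True
        else:
            session["userid"] = "carlos"
        in_sub_state.set()       # the handler is now between its two writes
        may_finish.wait()
        if mfa_flag_first:
            session["userid"] = "carlos"
        else:
            session["enforce_mfa"] = True

    def sensitive_endpoint():
        logged_in = "userid" in session
        return logged_in and not session.get("enforce_mfa", False)

    worker = threading.Thread(target=login_handler)
    worker.start()
    in_sub_state.wait()          # the second request arrives inside the window
    granted = sensitive_endpoint()
    may_finish.set()
    worker.join()
    return granted
```

When the user ID is written first, the second request sees a logged-in session with no MFA flag and is let through. When the flag is written first, the session is never in a state that both identifies a user and lacks the flag.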

Methodology

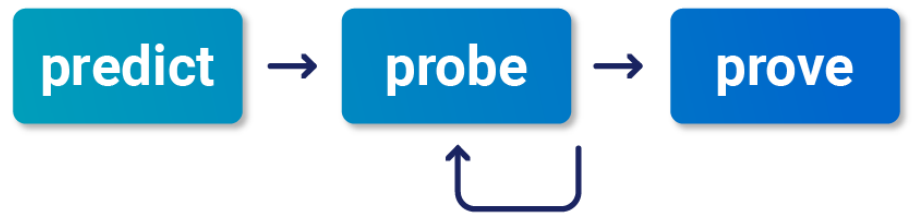

To detect and exploit hidden multi-step sequences, we recommend the following methodology, which is summarized from the whitepaper Smashing the state machine: The true potential of web race conditions by PortSwigger Research.

- Predict Potential Collisions

  - Is this endpoint security critical? Many endpoints don’t touch critical functionality, so they’re not worth testing.

  - Is there any collision potential? For a successful collision, you typically need two or more requests that trigger operations on the same record. For example, consider the following variations of a password reset implementation:

- Probe for Clues

  - To recognize clues, you first need to benchmark how the endpoint behaves under normal conditions. You can do this in Burp Repeater by grouping all of your requests and using the Send group in sequence (separate connections) option. For more information, see Sending requests in sequence.

  - Next, send the same group of requests at once using the single-packet attack (or last-byte sync if HTTP/2 isn’t supported) to minimize network jitter. You can do this in Burp Repeater by selecting the Send group in parallel option. For more information, see Sending requests in parallel. Alternatively, you can use the Turbo Intruder extension, which is available from the BApp Store.

  - Anything at all can be a clue. Just look for some form of deviation from what you observed during benchmarking. This includes a change in one or more responses, but don’t forget second-order effects like different email contents or a visible change in the application’s behavior afterward.

- Prove the Concept

  - Try to understand what’s happening, remove superfluous requests, and make sure you can still replicate the effects.

  - Advanced race conditions can cause unusual and unique primitives, so the path to maximum impact isn’t always immediately obvious. It may help to think of each race condition as a structural weakness rather than an isolated vulnerability.

NOTE: For a more detailed methodology, check out the full whitepaper: Smashing the state machine: The true potential of web race conditions
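For the "Probe for Clues" step, one simple way to spot deviations is to compare how often each kind of response appears in the sequential benchmark and in the parallel run. The sketch below assumes you have already reduced each response to a `(status, length)` pair. The `find_deviations` helper is a hypothetical name, and this comparison only catches changes visible in the responses themselves, not second-order effects such as email contents.

```python
from collections import Counter


def find_deviations(baseline, parallel):
    """Map each (status, length) signature to (baseline count, parallel count) where they differ."""
    base_counts = Counter(baseline)
    par_counts = Counter(parallel)
    return {
        sig: (base_counts[sig], par_counts[sig])
        for sig in set(base_counts) | set(par_counts)
        if base_counts[sig] != par_counts[sig]
    }


# Made-up sample data: one success in sequence, three successes in parallel.
baseline = [(200, 5120)] + [(400, 310)] * 4
parallel = [(200, 5120)] * 3 + [(400, 310)] * 2
deviations = find_deviations(baseline, parallel)
```

An empty result means the parallel run matched the benchmark. Any entry is a clue worth investigating further.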

Multi-endpoint Race Conditions

Perhaps the most intuitive form of these race conditions involves sending requests to multiple endpoints at the same time. Think about the classic logic flaw in online stores where you add an item to your basket or cart, pay for it, then add more items to the cart before force-browsing to the order confirmation page. A variation of this vulnerability can occur when payment validation and order confirmation are performed during the processing of a single request. The state machine for the order status might look something like this:

Aligning Multi-endpoint Race Windows

When testing for multi-endpoint race conditions, you may encounter issues trying to line up the race windows for each request, even if you send them all at exactly the same time using the single-packet technique. This common problem is primarily caused by the following two factors:

- Delays introduced by network architecture: For example, there may be a delay whenever the front-end server establishes a new connection to the back-end. The protocol used can also have a major impact.

- Delays introduced by endpoint-specific processing: Different endpoints inherently vary in their processing times, sometimes significantly so, depending on what operations they trigger.
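The effect of these delays can be shown with simple interval arithmetic. In the sketch below every number is invented for illustration, and `race_window` and `windows_overlap` are hypothetical helpers. Each request's race window is modelled as an interval that starts after some delay from the moment the request is sent.

```python
def race_window(send_time_ms, delay_before_ms, window_ms):
    """Return the (start, end) interval in which a request touches shared state."""
    start = send_time_ms + delay_before_ms
    return (start, start + window_ms)


def windows_overlap(a, b):
    """Two half-open intervals overlap if each starts before the other ends."""
    return a[0] < b[1] and b[0] < a[1]


# Both requests sent at t=0, but endpoint A does 50 ms of work before its window.
slow = race_window(send_time_ms=0, delay_before_ms=50, window_ms=2)
fast = race_window(send_time_ms=0, delay_before_ms=5, window_ms=2)
aligned_at_send = windows_overlap(slow, fast)

# Delaying the faster request by 46 ms brings the two windows together.
fast_delayed = race_window(send_time_ms=46, delay_before_ms=5, window_ms=2)
aligned_after_delay = windows_overlap(slow, fast_delayed)
```

In this toy model, sending both requests at the same instant misses the collision entirely, while offsetting the faster request lines the windows up. In practice the delays are not known in advance and vary between attempts.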

Bug Culture Wiki
